Screenshot from Gateway II: Homeworld

While it is valuable to explore interactive fiction in terms of its content--to conceptualize it as game or narrative or puzzle or riddle--works of IF are computer programs first and foremost. An IF author hopes that the program's user interface is transparent enough to "fall away" and let the user engage directly with the work's content, but this is not always the case.

IF's traditional user interface provides a simple command line prompt at which the user can type what they want to do in natural language. Often, these are simple imperative commands, such as look or wait or get lantern. Or they can be much more complicated, such as tell sally to take the broken glass and put it into the red bin.

However, while the potential variation in natural language utterances is incredibly broad, works of interactive fiction are necessarily finite in scope. When the interface suggests that any logical command will do--yet the system itself is authored to handle only a subset of what the user might think to try--there will eventually be a jarring disconnect. This disconnect between a user-entered command and system-supported interpretation can occur for a number of different reasons.

First, the IF parser might support the particular action that the user is attempting but simply lack the particular word form or synonym that the user is giving. For example, the user might enter the command chat with the stable boy and get an error message: I don't know the word "chat". The user's goal here is to have a conversation with the stable boy. The game may well support the action of conversing with other characters, and it might map a number of different synonyms--such as talk, gossip, and converse--to this same basic talk verb. But because the game lacks the "chat" synonym, the user's command fails.

This same synonym problem affects nouns as well. For example, if an IF author neglects to indicate that a stone bust can also be called a "statue", the command get statue will fail while get stone bust will work.
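To make the synonym problem concrete, here is a minimal Python sketch of a vocabulary lookup. It assumes a parser that maps every known word to one canonical verb or object ID. The tables, the `interpret` function, and IDs such as `stable_boy` are hypothetical and are not modeled on any particular IF development system.

```python
# Hypothetical vocabulary tables: every synonym maps to one canonical verb or object ID.
VERB_SYNONYMS = {
    "talk": "talk", "gossip": "talk", "converse": "talk",  # no entry for "chat"
    "take": "take", "get": "take",
}
NOUN_SYNONYMS = {
    "stable boy": "stable_boy", "boy": "stable_boy",
    "stone bust": "stone_bust", "bust": "stone_bust",      # no entry for "statue"
}
FILLER_WORDS = {"a", "the", "to", "with"}


def interpret(command: str) -> str:
    """Map a 'verb [filler] noun phrase' command to canonical IDs, or report an unknown word."""
    words = command.lower().split()
    if not words:
        return "I beg your pardon?"
    verb_word = words[0]
    noun_words = [w for w in words[1:] if w not in FILLER_WORDS]
    if verb_word not in VERB_SYNONYMS:
        return f"I don't know the word \"{verb_word}\"."
    if not noun_words:
        return "You need to name an object."
    noun_phrase = " ".join(noun_words)
    if noun_phrase not in NOUN_SYNONYMS:
        # Simplification: blame the last word of the unrecognized noun phrase.
        return f"I don't know the word \"{noun_words[-1]}\"."
    return f"{VERB_SYNONYMS[verb_word]}({NOUN_SYNONYMS[noun_phrase]})"


print(interpret("chat with the stable boy"))  # I don't know the word "chat".
print(interpret("talk with the stable boy"))  # talk(stable_boy)
print(interpret("get statue"))                # I don't know the word "statue".
print(interpret("get stone bust"))            # take(stone_bust)
```

In this sketch, adding a single table entry (`"chat": "talk"` or `"statue": "stone_bust"`) is enough to fix either failure, which is exactly the kind of entry an author can easily overlook.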

Similarly, the parser may support only certain grammatical structures. Give egg to troll might work, while give troll egg or even give troll the egg may fail.
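Grammatical coverage can be sketched the same way. The following hypothetical Python fragment assumes that the parser matches whole commands against a table of sentence patterns. Because only the give-object-to-recipient structure is listed, the other two word orders fall through. The names `GRAMMAR` and `match_grammar` are illustrative only.

```python
import re

# Hypothetical grammar table: each supported sentence structure is one regex.
# Only "give <object> to <recipient>" is listed, so other word orders are rejected.
GRAMMAR = [
    ("give", re.compile(r"give (?:the )?(?P<obj>\w+) to (?:the )?(?P<recipient>\w+)")),
]


def match_grammar(command: str):
    """Return (action, slots) for the first pattern matching the whole command, else None."""
    text = command.lower().strip()
    for action, pattern in GRAMMAR:
        match = pattern.fullmatch(text)
        if match:
            return action, match.groupdict()
    return None


print(match_grammar("give egg to troll"))   # ('give', {'obj': 'egg', 'recipient': 'troll'})
print(match_grammar("give troll egg"))      # None
print(match_grammar("give troll the egg"))  # None
```

Supporting give troll the egg in this scheme would require a second pattern with the recipient slot first. Each structure the author does not anticipate is another way for a sensible command to fail.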

In addition to such syntactic mismatches, an IF parser can fail at a semantic level. The simplest case is when the IF world does not support the given verb under any synonym. For example, a player might want to repel the vampire, but this repel action is simply not possible in the game if the IF author has not written the code necessary to support it.

Similarly, objects that should logically be present, or that are even mentioned briefly, may not actually be modeled in the virtual world. For example, the player's character might be standing on a busy urban sidewalk with an urgent package to deliver under one arm. Yet if the player pauses to type look at street, she might get the response You see no such thing. Logically, if there is a sidewalk, there should be a street, but the author may not have modeled it explicitly. This disconnect is compounded if the author actually mentions traffic, cars, or even the street itself in the description of the location.

This failure can be slightly less jarring if the error message is crafted in such a way that the IF parser appears to recognize what the player meant but is simply reporting that the player is exploring in an unsupported direction. In the example above, the parser could instead reply with The street is unimportant or You do not need to refer to the street in this game. The edges of the game are still revealed to the player here, but not as sharply. It implies that the street was not forgotten by the author, but deliberately not implemented.

As with objects, there is a range of ways to recognize but still not support particular user actions. One is to provide only illusory success: some outcome that has no effect on the game world. For example, if the user types jump, the reply might be You jump up and down on the spot. The system has recognized and accepted the user's command, but, beyond the few lines of code needed to provide this short response, the IF author does not need to implement any world state changes that result from jumping.

Alternatively, an IF system can refuse a player's request. Suppose the player types get statue. If the statue is simply decoration and not essential to the author's story, the author will not want to spend time modeling all of the possible interactions between this statue and other objects and locations in the game. There is no obvious way to provide illusory success here: either you can carry the statue off with you or you can't. This means the author has to refuse the player's command. This can be a blunt refusal: You cannot get the bust. It could accept that the attempt makes sense, but explain why it fails, either for a physical reason (The stone bust proves too heavy to lift, let alone carry around with you) or by reinterpreting the player character's motivation (Your troubles are heavy enough. You decide not to add the extra burden of the stone bust). Finally, some refusals may be hints that the action is possible but by a different means. For example, the refusal You can't reach the stone bust from here implies that it might be possible to reach the statue later given the use of a stool or ladder or rope.
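The response strategies described above (illusory success, deliberately unimplemented scenery, and explained refusals) can all be expressed as crafted text with no world-state changes. This hypothetical Python sketch assumes that commands have already been reduced to an (action, object) pair. It looks up a crafted response and falls back to the blunt "You see no such thing." for anything unmodeled. The table contents and the `respond` function are illustrative and are not taken from any real game.

```python
from typing import Optional

# Hypothetical table of crafted responses keyed by (action, object).
# None of these entries modifies a world model; each only returns text.
CRAFTED_RESPONSES = {
    ("jump", None): "You jump up and down on the spot.",  # illusory success
    ("examine", "street"): "The street is unimportant.",  # recognized but unimplemented scenery
    ("take", "stone_bust"): (                             # refusal with a physical reason
        "The stone bust proves too heavy to lift, let alone carry around with you."
    ),
}
DEFAULT_RESPONSE = "You see no such thing."  # blunt fallback for anything unmodeled


def respond(action: str, obj: Optional[str] = None) -> str:
    """Return the crafted response for (action, obj), or the blunt default."""
    return CRAFTED_RESPONSES.get((action, obj), DEFAULT_RESPONSE)


print(respond("jump"))               # You jump up and down on the spot.
print(respond("examine", "street"))  # The street is unimportant.
print(respond("examine", "cars"))    # You see no such thing.
```

In this sketch, each entry costs the author a line of text rather than a new piece of simulation. The trade-off is that every one of these responses still has to be anticipated and written by hand.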

An IF author has a particular story and world in mind. Given her limited time and resources, she will focus on authoring the objects and actions necessary to that story. However, when this finite game scope is paired with the apparently unrestricted input of a natural language command line, the user will eventually attempt an interaction that is beyond that scope. If this disconnect occurs at a syntactic level, the parser will be unable to make sense of the command at all, even if it would have understood a synonymous command. Even if the parser can make sense of the command, the action may still need to be refused, more or less elegantly, at the semantic level, unless the author is willing to spend the time and energy to fully implement and debug all of its possible outcomes. For this reason, much of an IF author's time goes into crafting humorous or gentle refusals that try to guide the user back to the actions and objects that actually matter in the game.

This disconnect ultimately stems from the opaqueness of the interface. A simple text prompt suggests that it can accept a free range of input. However, it does not indicate the supported command grammar, nor the valid synonyms for either verbs or game objects. The game's descriptions also often fail to distinguish objects that support a wide range of actions from those that don't.

A different UI could resolve many of these limitations by clearly indicating interactive objects and the exact range of supported actions for each, all without the possibility of syntactic input errors.

Clear affordances have been the foundation of human-computer interaction (HCI) and user interface design for decades.

The concept originated with psychologist James Gibson (1977). For Gibson, an affordance is an "action possibility" offered by particular features of the environment. For example, a smooth, clean, level surface a couple of feet off the ground affords sitting to most humans. If the surface has a long smooth edge, it might also afford grinding to a teenager with a skateboard. Although an affordance is a relationship defined relative to the capabilities of a particular actor, Gibson still considered affordances to be based upon objectively measurable features. The environment could afford a given use even if this potential went unperceived and unused by a given actor.

In 1988, Donald Norman (2002) applied this concept to interaction with human-constructed objects, and from there the concept was taken up by HCI. Norman focuses much more on perceived affordances. That is, due to an actor's capabilities, goals, plans, or past experiences, the environment can suggest some possible actions more strongly than others. This emphasis on perceptibility is valuable to system designers, since a possible-yet-unperceived use of an object or the environment is ultimately of little practical utility. This is the sense of affordance that I will use here.

Norman suggests that there are seven stages to performing a significant action in the world. The actor 1) forms a goal, 2) breaks this goal down into specific intentions and 3) translates those intentions into necessary actions in the world that are then 4) executed. The actor then 5) perceives and 6) interprets the state of the world in order to 7) evaluate the outcome relative to their original goal.

A system's affordances play an essential role in this action process. The actor must understand the general function of a given device, recognize how this relates to her goals, and then determine which specific actions are possible with it as related to her intentions. If the actor's intentions cannot easily be mapped to actions supported by the system, there is a significant Gulf of Execution. Similarly, if it is not clear what effect a given action had on the system, there is a significant Gulf of Evaluation.

Norman's approach to affordances has been applied specifically to the domain of interactive drama. By blending the narrative poetics of Aristotle with the concept of affordances, Mateas (2004) proposed a model of user agency for interactive drama. Specifically, the various possible actions a user might take provide the "material cause" for a user's interactions. At the same time, the context of the story so far suggests which goals or intentions might be most appropriate. These story constraints provide a "formal cause". Mateas proposes that a user will feel the most agency when these two causes are balanced: they have one or more goals in mind thanks to the story context, and the interactions possible in the virtual world sufficiently afford the means by which those goals might be realized.

Tomaszewski & Binsted (2006) expanded this model to multiple levels of narrative. For example, it is possible to differentiate world-level from story-level agency. World-level agency is the ability to successfully interact with the virtual world. The game's medium provides a representation--such as a text description or graphical depictions--of that virtual world. From this raw material, the user can mentally construct the existence of particular game world objects. The player's constructed model of that virtual world then provides the context and constraints for determining which object interactions should be possible.[1] The game's medium also affords the input controls by which the player can then effect those intended actions.

Such world-level agency is then a prerequisite for any story-level agency. For example, a story's context might provide the player with the story-level goal of courting another character. The player's conception of the world objects and the actions possible with them then provide her the material for such action. For example, she might try to compliment the other character in conversation, give him a gift, or defend him from the attacks of other characters. To do so, the player would then need to exert her world-level agency to actually perform one of these specific actions in the game world.

Some of the challenges users face when dealing with traditional command-line input in a story-oriented or textual virtual world have already been empirically examined to some extent.

In Tomaszewski (2011), I evaluated an IF-based interactive drama. Twenty-four participants completed the study. Although each player completed a 10-minute in-world IF tutorial before playing their first recorded session, 20% of the commands they entered failed to produce a valid response from the system. In open comments, 25% of participants listed the command-based input system as the least enjoyable aspect of the game, while only one participant mentioned it as the most enjoyable aspect.

Mehta, Corradini, Ontañón, and Henrichsen (2010) explored the tradeoffs between graphical and text media for game output and between menu and natural language modalities for input. To do this, they used the same portion of Anchorhead used in earlier research on declarative optimization-based drama management (DODM) (Nelson 2005, Sharma 2010). Their study did not clearly separate these two concerns, though: they tested a graphical version of the game with natural language input against a text-based version with menu-driven input. When examining the qualitative responses of 30 participants, they concluded that the natural language input was more natural, leading players to feel more immersed and engaged. However, this input modality also "made it difficult for some users to figure out appropriate actions". It also created "a false illusion" of what range of input the system could handle without generating errors, which led to some user frustration.

Sali et al. (2010) examined the effects of different input systems for character dialog within graphical games. Specifically, they looked at using a sentence-selection menu (where a character's exact words are shown), an abstract response menu (where the general sentiment or goal of an utterance is given), or natural language input. Using the same underlying game (Mateas and Stern's Facade), they implemented the three different input systems. The two menu-based systems would pause the game, while natural language input had to be entered in real time. Based on the semi-structured interview responses of 35 participants, they examined a number of different factors.

Participants were fairly evenly split on whether natural language input or one of the two menu systems led to more engagement. Some preferred the low-level control of being able to choose their own words, while others appreciated the high-level control over the game offered by choosing well-crafted and effective utterances. The natural language input system was clearly deemed the most difficult to use and the one that offered the least control. However, it was also deemed the most enjoyable by nearly half of participants, with 49% preferring it over either of the two menu systems.

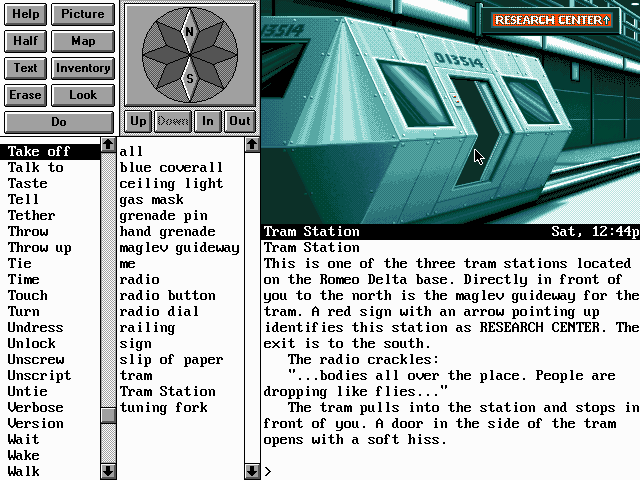

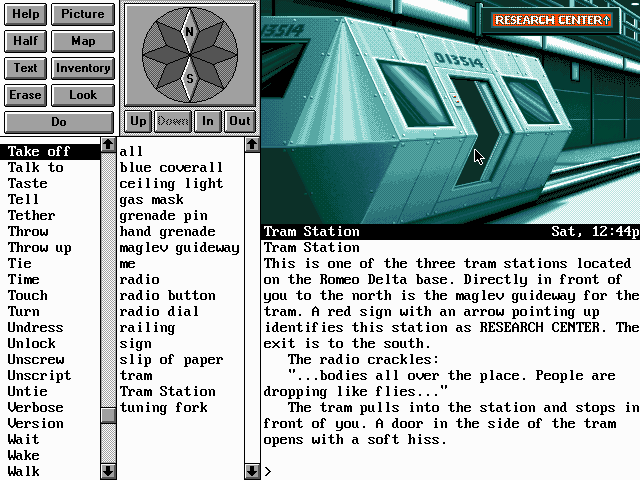

In the late 1980s and early 1990s, Legend Entertainment tackled some of the affordance issues inherent to the traditional IF interface. Their games were clearly interactive fiction, but with many graphical additions. Some games included video cut-scenes or animated images of the current location. In terms of the UI, most Legend games included a location image, a compass rose, and menus of possible actions and available objects. All of these additional UI components could be interactively clicked to perform certain actions.

My experience of these games was that the extras were a bit like training wheels: a good way to learn what's available, but eventually I left them behind and relied on purely textual input, which was much faster. The compass rose was particularly useful, but the menus generally involved too much scrolling.

Various interactive fiction authors have continued to experiment with different IF user interfaces, often blurring the boundary between interactive fiction and hypertext fiction, as well as adding other novel textual effects. For example, some have used menus for conversations, where users select a particular utterance. Some have added hypertext links for a subset of possible actions, or have suggested a subset of possible actions as hyperlinks while still leaving the command line free for other options.

Most of these experiments have been specific to one or two particular games, however, rather than providing a generic solution that could easily be reused across a wide variety of IF games. Furthermore, to my knowledge, none of them has been empirically analyzed regarding its effectiveness or its effect on gameplay across different games.

Argax Project : Thesis : A Rough Draft Node
http://www2.hawaii.edu/~ztomasze/argax

Last Edited: 31 Jan 2015 ©2013 by Z. Tomaszewski.